|

What is multi-modal information processing?

To perceive external information more accurately, humans combine several senses, namely the five senses and somatosensation (the sense of equilibrium, etc.), to process the information they receive. This is called multi-modal information processing. Knowledge of multi-modal information processing is very useful for creating virtual reality applications. The aim of our research is to clarify the aspects of multi-modal information processing related to hearing.

Fig. What is Multi-modal Information Processing?

McGurk effect

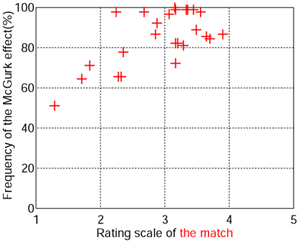

The McGurk effect is a well-known multi-modal interaction between vision and hearing in speech perception. When we are shown the image of a speaker saying "/k/" together with the sound of a speaker saying "/p/", we perceive the sound "/t/" for each syllable. To study the effect further, we examined the relation between a speaker's audio-visual characteristics and the McGurk effect.

The audio-visual stimuli were made by combining the faces and voices of five speakers. Each test subject was then asked to rate the sense of "matching", that is, how well the combined face and voice fitted together.

The results showed that the frequency of the McGurk effect increased as the matching rating increased.

Fig. The relationship between the McGurk effect and the talker’s audio-visual characteristics.

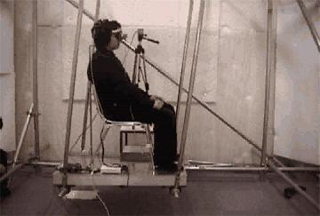

Self-motion perception produced by acoustic information

When we move, we perceive our own motion. We studied the interaction between self-motion perception and acoustic information. The results suggested that self-motion perception is influenced not only by visual information but also by acoustic information.

To analyze this relationship in more detail, we investigated whether the direction of perceived self-motion shifts toward a moving sound image. In addition, we examined whether amplitude-modulated (AM) and frequency-modulated (FM) sounds affect the perceived magnitude of forward-backward self-motion. The results indicated that the amount of perceived self-motion was modified by changes in the frequency or amplitude of the sounds.
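As a rough illustration of what AM and FM sounds are, the following Python sketch synthesizes a sinusoidally amplitude-modulated tone and a sinusoidally frequency-modulated tone. The function names and every parameter (carrier frequency, modulation rate, depth, deviation, sample rate) are assumptions chosen for illustration; they are not the stimuli used in the experiment.

```python
import math


def am_tone(carrier_hz, mod_hz, depth, duration_s, sample_rate=8000):
    """Sinusoidally amplitude-modulated tone, scaled to stay within [-1, 1].

    depth is the modulation depth (0 = no modulation, 1 = full modulation).
    All parameters are illustrative assumptions.
    """
    n = int(duration_s * sample_rate)
    samples = []
    for i in range(n):
        t = i / sample_rate
        envelope = 1.0 + depth * math.sin(2 * math.pi * mod_hz * t)
        carrier = math.sin(2 * math.pi * carrier_hz * t)
        samples.append(envelope * carrier / (1.0 + depth))
    return samples


def fm_tone(carrier_hz, mod_hz, deviation_hz, duration_s, sample_rate=8000):
    """Sinusoidally frequency-modulated tone.

    The instantaneous frequency swings between carrier_hz - deviation_hz
    and carrier_hz + deviation_hz at a rate of mod_hz.
    """
    n = int(duration_s * sample_rate)
    beta = deviation_hz / mod_hz  # modulation index
    samples = []
    for i in range(n):
        t = i / sample_rate
        phase = 2 * math.pi * carrier_hz * t + beta * math.sin(2 * math.pi * mod_hz * t)
        samples.append(math.sin(phase))
    return samples
```

For example, `am_tone(440.0, 4.0, 0.5, 1.0)` returns one second of a 440 Hz tone whose level rises and falls four times per second, while `fm_tone(440.0, 4.0, 50.0, 1.0)` returns a tone whose pitch sweeps 50 Hz above and below 440 Hz at the same rate.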

Fig. The equipment (left) and the image model of the result (right).

Fig. An experiment participant wearing a head-mounted display and a virtual auditory display


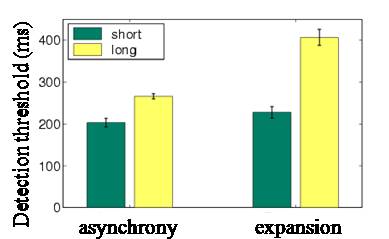

The process of audio-visual speech perception

We investigate how observers use the information in a talker's dynamic face image when they hear and understand a speech signal. As a stimulus, we use a time-expanded speech signal combined with the original moving image of the speaker's face. The results suggest that when the onsets of the two signals are synchronous, visual information helps observers understand the speech signal even if the offsets of the two signals are asynchronous. In addition, the results suggest that the detection and tolerance thresholds of audio-visual asynchrony might depend on the ratio of the expansion rate to the word duration.
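To see why synchronous onsets still lead to asynchronous offsets, consider the simplest case, in which the audio is stretched uniformly by a constant factor while the video is left unchanged. The sketch below computes the resulting audio lag under that assumption. The function names and the uniform-stretch model are hypothetical; the text does not state how the expansion was applied.

```python
def audio_lag_ms(video_time_ms, expansion_rate):
    """Lag of the stretched audio behind the video at a given video time.

    Assumes both streams start together and the audio is stretched
    uniformly, so the audio event belonging to video time t occurs at
    expansion_rate * t. This model is an illustrative assumption.
    """
    if expansion_rate <= 0:
        raise ValueError("expansion_rate must be positive")
    return (expansion_rate - 1.0) * video_time_ms


def offset_lag_ms(word_duration_ms, expansion_rate):
    """Lag at the end of the word, where the asynchrony is largest."""
    return audio_lag_ms(word_duration_ms, expansion_rate)
```

Under this model the lag is zero at the onset and grows linearly, so a 500 ms word expanded by a factor of 1.2 ends about 100 ms after the corresponding video. The same expansion rate therefore produces a larger offset asynchrony for longer words.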

Fig. The detection thresholds of asynchrony when visual and audio signals are desynchronized (left) and when only the audio signal is expanded (right)

Fig. The detection threshold of asynchrony as a function of the ratio to word length

|